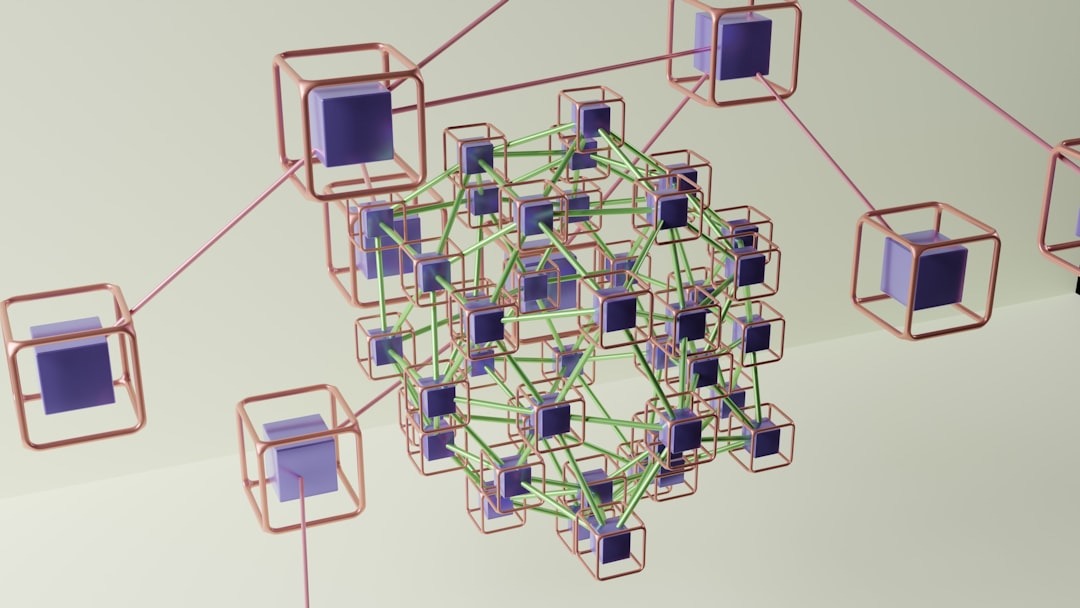

Multithreading is a programming paradigm that allows multiple threads to exist within the context of a single process, enabling concurrent execution of tasks. Each thread represents a separate path of execution with its own stack, and it can run independently while sharing the process’s memory space (heap and global data) with the other threads. This capability is particularly advantageous in modern computing environments where multi-core processors are prevalent, as it allows for more efficient utilization of CPU resources.

By dividing a program into smaller, manageable threads, developers can enhance performance and responsiveness, especially in applications that require significant computational power or need to handle multiple tasks simultaneously. Responsiveness matters most in interactive software. For instance, in a graphical user interface (GUI) application, a single-threaded approach may lead to unresponsive behavior when the main thread is busy processing data.

In contrast, by employing multithreading, the application can continue to respond to user inputs while performing background tasks. This separation of concerns not only enhances user experience but also allows for more complex operations to be executed without hindering the overall functionality of the application.
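A minimal Python sketch of this pattern, assuming a plain script stands in for a GUI event loop: the hypothetical `slow_task` runs on a worker thread and writes its result into a dictionary that both threads share, while the main thread keeps looping (the counter stands in for handling user input) until the worker finishes.

```python
import threading
import time


def slow_task(results: dict) -> None:
    """Hypothetical long-running job, e.g. loading or parsing a large file."""
    time.sleep(0.2)                      # stand-in for real work
    results["answer"] = sum(range(1_000))


def run_app() -> tuple[int, int]:
    results: dict = {}                   # shared memory: both threads see this dict
    worker = threading.Thread(target=slow_task, args=(results,), name="worker")
    worker.start()

    events_handled = 0
    # The main thread stays free to "respond to input" while the worker runs.
    while worker.is_alive():
        events_handled += 1              # placeholder for handling pending UI events
        time.sleep(0.01)

    worker.join()                        # worker is done, so results is complete
    return results["answer"], events_handled


if __name__ == "__main__":
    answer, handled = run_app()
    print(f"answer={answer}, events handled meanwhile={handled}")
```

In a real GUI toolkit the worker would usually hand its result back to the UI thread instead of having the main thread poll, because most toolkits only allow the interface to be updated from the main thread.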

Key Takeaways

- Multithreading allows multiple threads to execute concurrently, improving performance and responsiveness in software applications.

- Benefits of multithreading include improved resource utilization, better responsiveness, and enhanced user experience.

- Best practices for multithreading include avoiding race conditions, using thread-safe data structures, and minimizing thread contention.

- Common challenges and pitfalls in multithreading include deadlocks, thread starvation, and difficulty in debugging and testing.

- Tools and techniques for optimizing multithreading performance include profiling tools, thread pooling, and asynchronous programming.

- Multithreading is supported in various programming languages such as Java, C++, and Python, each with its own threading models and libraries.

- Multithreading is widely used in real-world applications such as web servers, video streaming, and gaming to handle concurrent tasks and improve performance.

- Future trends in multithreading technology include advancements in parallel processing, improved support for multicore processors, and better tools for debugging and analyzing multithreaded applications.

Benefits of Multithreading

One of the primary benefits of multithreading is improved application performance. By executing multiple threads concurrently, programs can leverage the full capabilities of multi-core processors, leading to faster execution times for computationally intensive tasks, although the speedup is limited by any portion of the work that must still run sequentially. For example, in data processing applications, such as those used in big data analytics, multithreading can significantly reduce the time required to process large datasets by distributing the workload across multiple threads.
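A sketch of distributing a workload, assuming the dataset is a Python list and that a sum of squares stands in for real per-record processing (`chunked`, `process_chunk`, and `parallel_sum_of_squares` are hypothetical names). The data is split into contiguous chunks and each chunk is processed on a thread from a `ThreadPoolExecutor`. One caveat: in CPython the Global Interpreter Lock keeps pure-Python CPU-bound chunks like these from actually running in parallel, so for such work the same code can be switched to `ProcessPoolExecutor`, which offers the same `map` interface.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(data: list, n_chunks: int) -> list:
    """Split data into at most n_chunks contiguous slices of roughly equal size."""
    if not data:
        return []
    size = -(-len(data) // n_chunks)     # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def process_chunk(chunk: list) -> int:
    """Hypothetical per-chunk work: a sum of squares."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: list, workers: int = 4) -> int:
    chunks = chunked(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() runs process_chunk on the pool's threads and yields the
        # partial results in the same order as the chunks.
        return sum(pool.map(process_chunk, chunks))


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10))))  # 285
```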

Another significant advantage of multithreading is enhanced responsiveness in applications. In scenarios where user interaction is critical, such as in web browsers or mobile applications, multithreading allows background tasks to run without freezing the user interface.

For instance, when a user uploads a file or performs a search, the application can continue to respond to other user actions while processing these tasks in separate threads. This leads to a smoother user experience and can increase user satisfaction and engagement with the application.

Best Practices for Multithreading

To implement multithreading effectively, developers should adhere to several best practices that help mitigate potential issues and enhance performance. One crucial practice is to minimize the resources that threads share. When multiple threads access the same data at the same time and at least one of them modifies it, the outcome can depend on the exact timing of the threads (a race condition), leaving the data inconsistent.
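To make the race concrete: incrementing a shared counter looks like one step but is a read, an add, and a write, so two threads can read the same old value and one update is lost. A minimal Python sketch of guarding it with a mutex (`threading.Lock`); the `Counter` class and the thread and iteration counts are illustrative assumptions.

```python
import threading


class Counter:
    """A counter that many threads can increment safely."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()    # the mutex guarding self.value

    def increment(self) -> None:
        # `self.value += 1` is a read-modify-write sequence, not one atomic step.
        # Holding the lock makes the whole sequence mutually exclusive.
        with self._lock:
            self.value += 1


def hammer(counter: Counter, times: int) -> None:
    for _ in range(times):
        counter.increment()


def run(threads: int = 8, times: int = 10_000) -> int:
    counter = Counter()
    workers = [threading.Thread(target=hammer, args=(counter, times)) for _ in range(threads)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return counter.value


if __name__ == "__main__":
    print(run())  # 80000
```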

To avoid these pitfalls, developers can use synchronization mechanisms such as mutexes (locks) or semaphores to control access to shared resources. By carefully managing how threads interact with shared data, developers can ensure that their applications remain stable and reliable.

Another important best practice is to keep threads focused on specific tasks and to limit how many are created. Each thread costs memory for its stack as well as scheduling and context-switching time, so creating too many threads can produce overhead that negates the benefits of multithreading. Instead, developers should aim for a manageable number of threads sized to the workload. Thread pools help here by managing thread creation and destruction efficiently, allowing for better resource utilization.

By reusing existing threads for new tasks rather than constantly creating and destroying them, applications can achieve better performance and lower latency.
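A short Python sketch of thread reuse with `concurrent.futures.ThreadPoolExecutor`: 100 small tasks run on a pool capped at four threads, and each task reports which thread ran it. The task function `handle` and the sizes are illustrative assumptions.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def handle(task_id: int) -> tuple[int, str]:
    """Hypothetical small task; reports which pool thread ran it."""
    return task_id, threading.current_thread().name


def run_tasks(n_tasks: int = 100, workers: int = 4):
    # The pool starts at most `workers` threads and reuses them for every task
    # instead of creating and destroying one thread per task.
    with ThreadPoolExecutor(max_workers=workers, thread_name_prefix="pool") as pool:
        results = list(pool.map(handle, range(n_tasks)))
    thread_names = {name for _, name in results}
    return results, thread_names


if __name__ == "__main__":
    results, names = run_tasks()
    print(f"{len(results)} tasks ran on {len(names)} threads: {sorted(names)}")
```

The same pool object can keep accepting work through `submit()` or `map()` for as long as it is open, which is what lets a long-running service avoid per-task thread startup costs.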

Common Challenges and Pitfalls

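| Challenge | Impact | Mitigation |
|---|---|---|
| Deadlock | Two or more threads wait indefinitely for locks held by each other, halting progress | Acquire locks in a consistent order; use timeouts when acquiring locks |
| Race conditions | Results depend on thread timing, leaving shared data inconsistent | Minimize shared state; protect shared data with mutexes or semaphores; use thread-safe data structures |
| Thread contention | Threads spend time waiting for the same locks instead of doing work | Reduce shared data; use concurrent data structures; keep locked sections short |
| Thread starvation | Some threads rarely or never get the CPU time or locks they need | Avoid holding locks for long periods; use fair locks where available |
| Difficult debugging and testing | Non-deterministic scheduling makes bugs hard to reproduce | Use race-detection tools and log thread activity |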

Despite its advantages, multithreading comes with its own set of challenges and pitfalls that developers must navigate. One common issue is deadlock, which occurs when two or more threads are waiting indefinitely for resources held by each other. This situation can lead to a complete halt in application execution if not handled properly.
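The classic case is two threads transferring money in opposite directions: thread 1 locks account A and waits for B while thread 2 locks B and waits for A. Below is a minimal Python sketch of the two prevention strategies this section names, resource ordering and lock timeouts; `Account`, `transfer`, and `try_transfer` are hypothetical names for illustration.

```python
import itertools
import threading


class Account:
    """Hypothetical bank account guarded by its own lock."""
    _ids = itertools.count()

    def __init__(self, balance: int) -> None:
        self.id = next(Account._ids)     # unique, fixed ordering key
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src: Account, dst: Account, amount: int) -> None:
    """Resource ordering: always lock the account with the smaller id first.

    With one global order, no thread can hold a "later" lock while waiting
    for an "earlier" one, so the circular wait a deadlock needs cannot form.
    """
    first, second = (src, dst) if src.id < dst.id else (dst, src)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


def try_transfer(src: Account, dst: Account, amount: int, timeout: float = 0.1) -> bool:
    """Timeout variant: give up instead of waiting forever; the caller may retry."""
    if not src.lock.acquire(timeout=timeout):
        return False
    try:
        if not dst.lock.acquire(timeout=timeout):
            return False                 # src.lock is released by the outer finally
        try:
            src.balance -= amount
            dst.balance += amount
            return True
        finally:
            dst.lock.release()
    finally:
        src.lock.release()


def repeat_transfers(src: Account, dst: Account, rounds: int) -> None:
    for _ in range(rounds):
        transfer(src, dst, 1)


def run_opposing_transfers(rounds: int = 10_000) -> tuple[int, int]:
    """Two threads move money in opposite directions, the classic deadlock setup."""
    a, b = Account(1_000), Account(1_000)
    t1 = threading.Thread(target=repeat_transfers, args=(a, b, rounds))
    t2 = threading.Thread(target=repeat_transfers, args=(b, a, rounds))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return a.balance, b.balance
```

Without the ordering in `transfer`, `run_opposing_transfers` can hang forever. The timeout variant avoids a permanent hang but can fail repeatedly under heavy contention, so callers typically back off briefly before retrying.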

To prevent deadlocks, developers should implement strategies such as resource ordering (acquiring locks in one consistent global order) or using timeout mechanisms when acquiring locks.

Another challenge associated with multithreading is debugging and testing. Because the operating system decides when each thread runs, the order in which threads interleave is non-deterministic, which can make it difficult to reproduce bugs consistently.

Issues such as race conditions may only manifest under specific timing conditions, complicating the debugging process. To address this challenge, developers can use tools designed for multithreaded applications, such as ThreadSanitizer (available in Clang and GCC) or Valgrind’s Helgrind, which detect data races at runtime, or add logging that records which thread performed each action in order to trace thread activity and identify problematic areas in the code.
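As a small illustration of the logging technique, Python’s standard `logging` module can stamp every line with the name of the thread that emitted it via the `%(threadName)s` format attribute, and naming threads explicitly makes the trace easier to read. The logger name, worker names, and `run_captured` helper are assumptions for this sketch.

```python
import io
import logging
import threading

# %(threadName)s is a standard LogRecord attribute: every line names its thread.
LOG_FORMAT = "%(asctime)s [%(threadName)s] %(message)s"


def make_logger(stream=None) -> logging.Logger:
    logger = logging.getLogger("threads-demo")     # hypothetical logger name
    logger.setLevel(logging.DEBUG)
    logger.propagate = False
    handler = logging.StreamHandler(stream)        # None means sys.stderr
    handler.setFormatter(logging.Formatter(LOG_FORMAT))
    logger.handlers = [handler]                    # replace any earlier handler
    return logger


def worker(logger: logging.Logger, item: int) -> None:
    logger.debug("start processing %s", item)
    # ... the real work would happen here ...
    logger.debug("done processing %s", item)


def run(stream=None, n: int = 3) -> None:
    logger = make_logger(stream)
    threads = [
        threading.Thread(target=worker, args=(logger, i), name=f"worker-{i}")
        for i in range(n)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def run_captured(n: int = 3) -> list[str]:
    """Same demo, but capture the log lines in memory (handy in a unit test)."""
    buf = io.StringIO()
    run(stream=buf, n=n)
    return buf.getvalue().splitlines()


if __name__ == "__main__":
    run()  # each line on stderr includes the emitting thread, e.g. [worker-0]
```

The interleaving of the `start` and `done` lines can differ from run to run, which is exactly the non-determinism described above.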

Tools and Techniques for Optimizing Multithreading Performance

Optimizing multithreading performance requires a combination of tools and techniques tailored to the specific needs of an application. Profiling tools are essential for identifying bottlenecks in multithreaded applications. These tools analyze thread performance and resource usage, allowing developers to pinpoint areas where optimization is needed.

For instance, tools like Visual Studio’s Performance Profiler or Intel VTune Profiler (formerly VTune Amplifier) provide insights into thread execution times and CPU utilization, helping developers make informed decisions about where to focus their optimization efforts.

In addition to profiling tools, developers can use programming constructs designed to enhance multithreading performance. For example, concurrent data structures such as concurrent queues or hash maps can help reduce contention among threads when accessing shared data.

These data structures are designed to handle concurrent access efficiently, minimizing the need for explicit locking mechanisms that can introduce overhead. By leveraging these specialized constructs, developers can improve both performance and scalability in their multithreaded applications.
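In Python, `queue.Queue` is one such structure: it does its own internal locking, so producer and consumer threads can share it without any explicit lock in application code. A minimal producer-consumer sketch, assuming doubling each item stands in for real processing and a sentinel object signals shutdown (both choices are illustrative).

```python
import queue
import threading

_DONE = object()  # sentinel: tells a consumer there is no more work


def consumer(tasks: queue.Queue, results: queue.Queue) -> None:
    while True:
        item = tasks.get()              # blocks until an item is available
        if item is _DONE:
            break
        results.put(item * 2)           # hypothetical processing step


def run(items, n_consumers: int = 3) -> list:
    tasks: queue.Queue = queue.Queue(maxsize=100)  # bounded: producer waits if consumers lag
    results: queue.Queue = queue.Queue()
    workers = [
        threading.Thread(target=consumer, args=(tasks, results))
        for _ in range(n_consumers)
    ]
    for w in workers:
        w.start()
    for item in items:                  # the main thread acts as the producer
        tasks.put(item)
    for _ in workers:
        tasks.put(_DONE)                # one stop signal per consumer
    for w in workers:
        w.join()
    # All consumers have finished, so draining the results queue here is safe.
    out = []
    while not results.empty():
        out.append(results.get())
    return sorted(out)


if __name__ == "__main__":
    print(run(range(5)))  # [0, 2, 4, 6, 8]
```

Because the sentinels are enqueued after every real item and the queue is first-in, first-out, each consumer only stops once all work has been handed out.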

Multithreading in Different Programming Languages

Multithreading support varies significantly across programming languages, each offering unique features and paradigms for managing concurrent execution. In Java, for instance, multithreading is built into the language through the Thread class and the Runnable interface. Java’s concurrency utilities also provide higher-level abstractions such as ExecutorService and ForkJoinPool, which simplify thread management and task execution.

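In contrast, CPython, the reference implementation of Python, has a Global Interpreter Lock (GIL) that allows only one thread at a time to execute Python bytecode within a single process. Python supports multithreading through the threading module, and threads remain useful for I/O-bound work because the GIL is released while a thread waits on I/O, but for CPU-bound tasks the GIL largely prevents threads from running in parallel. (Python 3.13 also introduced an experimental free-threaded build without the GIL.) Python’s multiprocessing module allows developers to bypass this limitation by spawning separate processes instead of threads, each with its own interpreter and GIL, enabling true parallelism at the cost of increased memory usage and the overhead of passing data between processes.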
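A sketch of sidestepping the GIL for CPU-bound work, using `concurrent.futures.ProcessPoolExecutor` (a higher-level interface built on separate worker processes) rather than the lower-level `multiprocessing` API. Counting primes by trial division is an assumed stand-in for any CPU-heavy job; the range is split into chunks that separate processes check in parallel.

```python
import math
from concurrent.futures import ProcessPoolExecutor


def is_prime(n: int) -> bool:
    """Trial division: deliberately CPU-bound pure-Python work."""
    if n < 2:
        return False
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return False
    return True


def count_primes(bounds: tuple[int, int]) -> int:
    """Count primes in the half-open range [start, stop)."""
    start, stop = bounds
    return sum(1 for n in range(start, stop) if is_prime(n))


def parallel_count_primes(limit: int, workers: int = 4) -> int:
    if limit <= 0:
        return 0
    step = -(-limit // workers)          # ceiling division so every number is covered
    ranges = [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]
    # Each range goes to a separate worker process with its own interpreter
    # and its own GIL, so the ranges are checked truly in parallel.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, ranges))


if __name__ == "__main__":
    # The guard matters: where workers are started with "spawn", each child
    # re-imports this module and must not start a pool of its own.
    print(parallel_count_primes(100_000))  # 9592 primes below 100,000
```

The functions handed to the pool must be defined at module level so they can be pickled and sent to the worker processes; this serialization is part of the overhead mentioned above.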

Multithreading in Real-world Applications

Multithreading finds extensive application across various domains, from web servers handling multiple client requests simultaneously to scientific computing applications performing complex simulations. For example, the Apache HTTP Server’s worker and event multi-processing modules serve requests from pools of threads, helping users receive timely responses even under heavy load. Not every server works this way: Nginx relies mainly on a small number of worker processes, each running an event-driven, non-blocking loop that handles many connections at once. In thread-based designs, each request can be processed in its own thread or handled by a thread pool, allowing for efficient resource management and improved throughput.
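The thread-per-request model can be sketched with Python’s standard-library `http.server.ThreadingHTTPServer` (Python 3.7+), which handles each incoming request in a new thread. This illustrates the idea only; it is not how Apache or Nginx are implemented, and the Python documentation does not recommend `http.server` for production. The handler name `WhoHandledMe` and the helper functions are hypothetical.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class WhoHandledMe(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # ThreadingHTTPServer runs each request in its own thread.
        body = f"handled by {threading.current_thread().name}\n".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # keep the demo quiet


def start_server() -> ThreadingHTTPServer:
    # Port 0 asks the operating system for any free port.
    server = ThreadingHTTPServer(("127.0.0.1", 0), WhoHandledMe)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(server: ThreadingHTTPServer) -> str:
    host, port = server.server_address[:2]
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", "/")
        return conn.getresponse().read().decode()
    finally:
        conn.close()


if __name__ == "__main__":
    server = start_server()
    try:
        for _ in range(3):
            print(fetch(server), end="")
    finally:
        server.shutdown()
        server.server_close()
```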

In scientific computing, multithreading plays a crucial role in simulations that require extensive calculations over large datasets. Applications such as computational fluid dynamics (CFD) or molecular dynamics simulations often leverage multithreading to distribute calculations across multiple cores. This parallel processing capability significantly reduces simulation times and enables researchers to explore complex phenomena more efficiently.

Future Trends in Multithreading Technology

As technology continues to evolve, so too does the landscape of multithreading and concurrent programming. One notable trend is the increasing adoption of asynchronous programming models that complement traditional multithreading approaches. Asynchronous programming allows developers to write non-blocking code that can handle I/O-bound operations more efficiently without relying on multiple threads.

This paradigm shift is particularly evident in web development frameworks like Node.js, which utilize an event-driven architecture to manage concurrent operations.
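Python’s `asyncio` follows the same event-loop model as Node.js. In this minimal sketch, `asyncio.sleep` stands in (as an assumption) for a non-blocking network or disk call, and `fake_io`, `main`, and `timed_run` are hypothetical names: three simulated requests overlap on a single thread, so the total time is close to the longest wait rather than the sum of all three.

```python
import asyncio
import threading
import time


async def fake_io(name: str, delay: float) -> tuple[str, int]:
    # While this coroutine is suspended in the await, the event loop
    # runs the other coroutines on the same thread.
    await asyncio.sleep(delay)
    return name, threading.get_ident()


async def main() -> list[tuple[str, int]]:
    # gather() runs the three "requests" concurrently and keeps their order.
    return await asyncio.gather(
        fake_io("a", 0.2), fake_io("b", 0.2), fake_io("c", 0.2)
    )


def timed_run() -> tuple[list[tuple[str, int]], float]:
    start = time.perf_counter()
    results = asyncio.run(main())
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = timed_run()
    # Expect roughly 0.2 s in total rather than 0.6 s, with a single thread id.
    print(results, f"{elapsed:.2f}s")
```

This only helps while tasks are waiting; a CPU-bound coroutine would block the single event-loop thread, which is why asynchronous code complements multithreading rather than replacing it.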

Another trend is the growth of machine learning and artificial intelligence. As these workloads become more prevalent, there will be a growing need for efficient parallel processing capabilities to handle large-scale data analysis and model training tasks.

Frameworks like TensorFlow and PyTorch already use multiple threads to parallelize operations on the CPU and support distributed training across multiple devices and machines, paving the way for more sophisticated applications that combine multithreading with machine learning techniques.

In conclusion, multithreading remains a vital aspect of modern software development, offering significant benefits in performance and responsiveness while presenting unique challenges that require careful management. As programming languages evolve and new paradigms emerge, multithreading will continue to shape how developers approach concurrent programming.
