Parallel computing is a computational paradigm that enables the simultaneous execution of multiple calculations or processes. This approach is particularly beneficial for tasks that can be divided into smaller, independent subtasks, allowing for significant reductions in processing time. The fundamental principle behind parallel computing is the division of a problem into smaller parts that can be solved concurrently, leveraging multiple processors or cores.

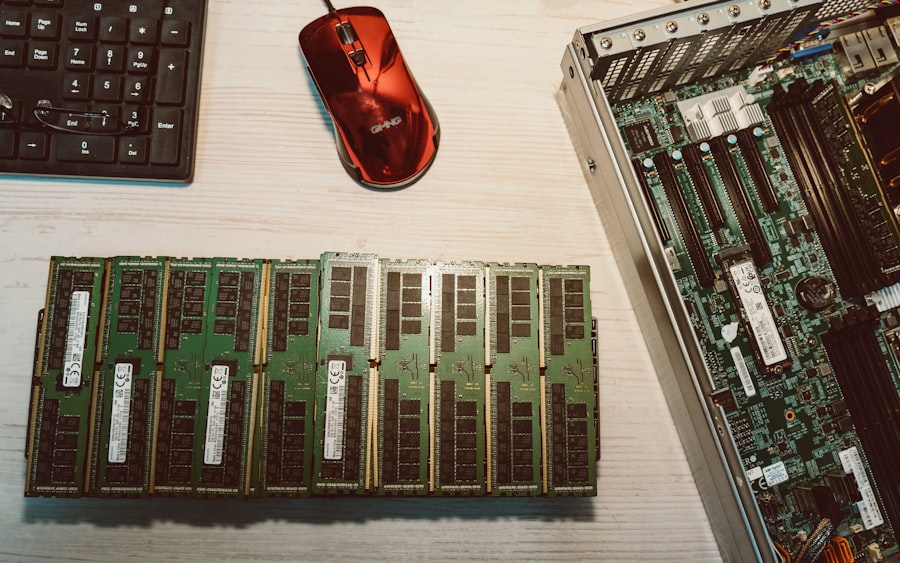

This is in stark contrast to traditional serial computing, where tasks are executed sequentially, one after the other. The architecture of parallel computing systems can vary widely, ranging from multi-core processors in personal computers to large-scale supercomputers that consist of thousands of interconnected nodes. Each node may contain multiple processors, and these processors can work together to solve complex problems more efficiently than a single processor could.

For instance, in scientific simulations, such as climate modeling or molecular dynamics, the ability to perform calculations in parallel allows researchers to explore vast datasets and intricate models that would be infeasible with serial processing alone. The evolution of parallel computing has been driven by the increasing demand for computational power across various fields, including engineering, finance, and artificial intelligence.

Key Takeaways

- Parallel computing enhances processing speed by dividing tasks across multiple processors.

- Selecting an appropriate parallel computing model is crucial for efficient application performance.

- Algorithm optimization is essential to fully leverage parallel computing capabilities.

- Parallel computing significantly improves data analysis and machine learning workloads.

- Addressing challenges like synchronization and scalability is key to successful parallel computing implementation.

Choosing the Right Parallel Computing Model

Selecting an appropriate parallel computing model is crucial for maximizing performance and efficiency. There are several models to consider, each with its own strengths and weaknesses.

One of the most common is the shared memory model, where multiple processors access a common memory space. This model simplifies communication between processors but can lead to contention as multiple threads attempt to read from and write to the same memory locations simultaneously.

Another prevalent model is the distributed memory model, where each processor has its own local memory and communicates with others through message passing. This model scales well for large systems and is often used in high-performance computing environments. For example, the Message Passing Interface (MPI) is a widely adopted standard for programming distributed memory systems, providing efficient communication between nodes in a cluster.

When choosing a model, it is essential to consider factors such as the nature of the problem, the available hardware, and the expected scalability. A well-chosen model can significantly enhance performance and reduce execution time.
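The difference between the shared memory and message passing styles can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: threads stand in for processors, a `queue.Queue` stands in for the network, and the function names `shared_memory_sum` and `message_passing_sum` are hypothetical. A real distributed memory program would run separate processes on separate nodes, typically through an MPI library.

```python
import queue
import threading


def shared_memory_sum(chunks):
    """Shared-memory style: every worker updates one shared total, guarded by a lock."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)      # local work needs no coordination
        with lock:                # contention point: one writer at a time
            total += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(chunks):
    """Message-passing style: workers share no state and send results as messages."""
    inbox = queue.Queue()         # stand-in for a communication channel

    def worker(chunk):
        inbox.put(sum(chunk))     # the only interaction is an explicit send

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # The coordinator receives exactly one message per worker and combines them.
    return sum(inbox.get() for _ in chunks)


chunks = [[1, 2, 3], [4, 5], [6]]
print(shared_memory_sum(chunks), message_passing_sum(chunks))
```

Both functions compute the same total. The first needs a lock because every worker writes to the same variable, while the second avoids shared writes at the cost of explicit communication.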

Optimizing Algorithms for Parallel Computing

Optimizing algorithms for parallel computing involves rethinking traditional approaches to problem-solving to take full advantage of concurrent execution. This process often requires decomposing algorithms into smaller tasks that can be executed independently. For instance, in sorting algorithms, techniques like merge sort can be adapted for parallel execution by dividing the dataset into smaller chunks that are sorted concurrently before merging the results.
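A minimal sketch of the chunk-then-merge idea follows. It makes several assumptions that are not in the text above: chunks are sorted with Python's built-in `sorted` rather than a hand-written merge sort, the sorted runs are combined with `heapq.merge`, and a thread pool is used only to keep the example self-contained. For CPU-bound sorting in CPython, a process pool would be the usual substitute. The name `parallel_merge_sort` is hypothetical.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor


def parallel_merge_sort(data, n_chunks=4):
    """Sort chunks concurrently, then k-way merge the sorted runs."""
    data = list(data)
    if not data:
        return []
    n_chunks = max(1, min(n_chunks, len(data)))
    size = -(-len(data) // n_chunks)          # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]

    # Each chunk is independent, so the sorts can run concurrently.
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        sorted_runs = list(pool.map(sorted, chunks))

    # The merge step is sequential here; it combines the already-sorted runs.
    return list(heapq.merge(*sorted_runs))


print(parallel_merge_sort([5, 3, 9, 1, 7, 2, 8]))
```

The merge step remains serial in this sketch, which is one reason the achievable speedup is smaller than the number of workers.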

Moreover, load balancing is a critical aspect of optimization in parallel computing. It ensures that all processors are utilized effectively without any single processor becoming a bottleneck due to an uneven distribution of work. Techniques such as dynamic scheduling can help distribute tasks more evenly among processors during runtime, adapting to variations in processing speed and workload.

Additionally, minimizing communication overhead between processors is vital; excessive communication can negate the benefits of parallelism. By carefully designing algorithms that reduce inter-process communication and optimize data locality, developers can achieve significant performance gains.
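The effect of load balancing can be illustrated with a small simulation rather than real workers. The sketch below assumes each task has a known cost and compares the finishing time (makespan) of a static split, where tasks are divided into contiguous blocks up front, with a simple dynamic policy, where each task goes to whichever worker becomes free first. The function names and the cost values are hypothetical.

```python
import heapq


def static_makespan(costs, n_workers):
    """Static scheduling: split the task list into contiguous blocks up front."""
    if not costs:
        return 0
    size = -(-len(costs) // n_workers)        # ceiling division
    blocks = [costs[i:i + size] for i in range(0, len(costs), size)]
    return max(sum(block) for block in blocks)


def dynamic_makespan(costs, n_workers):
    """Dynamic scheduling: each task goes to whichever worker frees up first."""
    finish_times = [0] * n_workers            # min-heap of per-worker finish times
    for cost in costs:
        earliest = heapq.heappop(finish_times)
        heapq.heappush(finish_times, earliest + cost)
    return max(finish_times)


costs = [5, 5, 5, 5, 1, 1, 1, 1]              # uneven work: expensive tasks come first
print(static_makespan(costs, 4))              # 10: two workers receive all the heavy tasks
print(dynamic_makespan(costs, 4))             # 6: heavy tasks are spread across workers
```

In a real system the dynamic policy has its own cost, since workers must coordinate through a shared queue or scheduler, so the gain depends on how uneven the tasks are.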

Utilizing Parallel Computing in Data Analysis

| Metric | Description | Typical Value | Impact on Data Analysis |
|---|---|---|---|
| Speedup | Ratio of time taken by serial execution to parallel execution | 2x – 50x | Reduces total computation time significantly |
| Scalability | Ability to maintain efficiency as number of processors increases | Good scalability up to 64 cores | Enables handling larger datasets efficiently |
| Efficiency | Speedup divided by number of processors | 60% – 90% | Indicates resource utilization effectiveness |
| Latency | Delay before computation starts due to overhead | Milliseconds to seconds | Can affect performance for small datasets |
| Throughput | Amount of data processed per unit time | Gigabytes per second | Higher throughput improves data processing capacity |
| Load Balancing | Distribution of work evenly across processors | Varies by algorithm | Prevents bottlenecks and maximizes speedup |
| Memory Bandwidth | Rate at which data can be read/written to memory | 10 – 100 GB/s | Critical for data-intensive parallel tasks |

Data analysis has become increasingly reliant on parallel computing due to the exponential growth of data generated across various domains. Traditional data processing methods often struggle to keep pace with this influx of information, making parallel computing an essential tool for data scientists and analysts. For example, frameworks like Apache Spark leverage parallel processing to handle large-scale data analysis tasks efficiently. Spark’s ability to distribute data across a cluster allows for rapid processing of big data workloads, enabling near-real-time analytics and insights.

In addition to frameworks like Spark, parallel computing can enhance statistical analysis and machine learning tasks. Techniques such as k-fold cross-validation can be parallelized by splitting the dataset into folds and training one model per fold concurrently, each on the remaining folds and evaluated on the fold it held out. Because the folds are independent of one another, this shortens evaluation time without changing the result, which makes more thorough model evaluation practical.

As organizations increasingly rely on data-driven decision-making, the integration of parallel computing into data analysis workflows becomes indispensable for extracting actionable insights from vast datasets.
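Parallel cross-validation can be sketched with the standard library alone. The example below is illustrative and rests on stated assumptions: the "model" is a hypothetical placeholder that predicts the mean of its training targets, folds are contiguous slices, and a thread pool is used for simplicity. With a real estimator the same structure applies, with one independent train-and-score task per fold.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def evaluate_fold(y, train_idx, test_idx):
    """Placeholder model: predict the training mean, score by mean squared error."""
    prediction = mean(y[i] for i in train_idx)
    return mean((y[i] - prediction) ** 2 for i in test_idx)


def parallel_cross_val(y, k=5):
    """Train and score the k folds concurrently; return one score per fold."""
    folds = list(k_fold_indices(len(y), k))
    with ThreadPoolExecutor(max_workers=k) as pool:
        futures = [pool.submit(evaluate_fold, y, tr, te) for tr, te in folds]
        return [f.result() for f in futures]   # results keep fold order


scores = parallel_cross_val([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], k=3)
print(scores, mean(scores))
```

Each fold's score depends only on its own train and test split, so running the folds concurrently gives the same scores as running them one after another.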

Implementing Parallel Computing in Machine Learning

The implementation of parallel computing in machine learning has transformed how models are trained and deployed. With the rise of deep learning, which often involves training complex neural networks on massive datasets, parallel computing has become a necessity rather than an option. Frameworks such as TensorFlow and PyTorch provide built-in support for distributed training across multiple GPUs or even entire clusters of machines. This capability allows researchers and practitioners to significantly reduce training times and makes it practical to train larger models on more data.

One notable example is the use of data parallelism in training deep learning models. In this approach, each training batch is split into smaller shards that are processed simultaneously on different processors or GPUs, each holding a copy of the model. Each processor computes gradients based on its subset of data, and these gradients are then aggregated to update the model parameters. This method not only accelerates training but also enhances scalability, as larger datasets can be handled more efficiently.

Furthermore, techniques like model parallelism distribute different parts of a neural network across multiple devices, enabling the training of larger models that would otherwise exceed the memory of a single device.
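The gradient aggregation step in data parallelism can be shown on a deliberately tiny problem. The sketch below assumes a one-parameter linear model `y ≈ w * x` with squared-error loss, plain Python lists instead of tensors, and threads standing in for GPUs. The names `shard_gradient` and `data_parallel_step` are hypothetical and do not correspond to any TensorFlow or PyTorch API.

```python
from concurrent.futures import ThreadPoolExecutor


def shard_gradient(w, shard):
    """Return (sum of d/dw of 0.5 * (w*x - y)**2 over the shard, shard size)."""
    return sum((w * x - y) * x for x, y in shard), len(shard)


def data_parallel_step(w, data, n_workers=2, lr=0.01):
    """One gradient-descent step with a non-empty batch split across workers."""
    shards = [data[i::n_workers] for i in range(n_workers)]
    shards = [s for s in shards if s]          # drop empty shards for tiny batches
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        results = list(pool.map(lambda s: shard_gradient(w, s), shards))
    # Aggregation: combine per-worker gradient sums into the full-batch mean.
    grad_sum = sum(g for g, _ in results)
    count = sum(n for _, n in results)
    return w - lr * grad_sum / count


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]   # generated by y = 2x
w = 0.0
for _ in range(200):
    w = data_parallel_step(w, data, n_workers=2)
print(round(w, 4))
```

Because the aggregated gradient equals the gradient of the whole batch, the number of workers changes how the work is divided but not the update itself, up to floating-point rounding.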

Overcoming Challenges in Parallel Computing

Despite its advantages, parallel computing presents several challenges that must be addressed to fully realize its potential. One significant challenge is synchronization among processes or threads. When multiple processes need to access shared resources or data structures, ensuring consistency and preventing race conditions becomes critical. Techniques such as locks, semaphores, and barriers are commonly employed to manage synchronization; however, they can introduce overhead that diminishes performance gains from parallelism.

Another challenge lies in debugging and profiling parallel applications. Traditional debugging tools may not effectively handle concurrent processes, making it difficult to identify issues such as deadlocks or performance bottlenecks. Specialized tools designed for parallel environments are necessary to provide insights into process interactions and resource usage.

Additionally, developers need to understand the underlying hardware architecture to optimize their applications effectively; this includes knowledge of cache hierarchies, memory bandwidth, and interconnects between processors.
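To make the synchronization challenge concrete, the following sketch protects a shared counter with a lock. It assumes Python threads and a hypothetical `SafeCounter` class; the update `value += 1` is a read-modify-write sequence, so without the lock two threads could read the same old value and one increment would be lost.

```python
import threading


class SafeCounter:
    """A counter whose read-modify-write update is protected by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:          # critical section: one thread at a time
            self.value += 1


def run_workers(counter, n_threads=4, n_increments=10_000):
    """Have several threads increment the same counter and return the final value."""
    def work():
        for _ in range(n_increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


print(run_workers(SafeCounter()))   # always n_threads * n_increments with the lock
```

The lock guarantees a correct total, but every increment now waits its turn, which is the overhead mentioned above. Reducing how often threads touch shared state, for example by accumulating locally and merging once, usually matters more than making the lock itself faster.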

Measuring Performance and Scalability in Parallel Computing

Evaluating the performance and scalability of parallel computing systems is essential for understanding their effectiveness in solving computational problems. Key metrics include speedup, which measures how much faster a parallel algorithm runs compared to its serial counterpart; efficiency, which assesses how well the available resources are utilized; and scalability, which examines how performance changes as more processors are added to the system. To measure these metrics accurately, benchmarking is often employed using standardized test cases that reflect real-world workloads.

Tools like LINPACK and NAS Parallel Benchmarks provide frameworks for assessing performance across various architectures and configurations. Additionally, profiling tools can help identify hotspots within code that may benefit from further optimization or refactoring.
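The speedup and efficiency metrics reduce to simple ratios of measured run times. The helper functions below are a minimal sketch; the function names and the timing figures in the usage example are hypothetical and not measurements from any benchmark.

```python
def speedup(t_serial, t_parallel):
    """Speedup: serial run time divided by parallel run time."""
    return t_serial / t_parallel


def efficiency(t_serial, t_parallel, n_processors):
    """Efficiency: speedup divided by the number of processors used."""
    return speedup(t_serial, t_parallel) / n_processors


def scaling_table(t_serial, timings):
    """timings maps processor count to parallel run time; returns (p, speedup, efficiency) rows."""
    return [
        (p, speedup(t_serial, t), efficiency(t_serial, t, p))
        for p, t in sorted(timings.items())
    ]


# Hypothetical timings in seconds for the same workload on 2, 4, and 8 processors.
for p, s, e in scaling_table(120.0, {2: 64.0, 4: 35.0, 8: 20.0}):
    print(f"{p} processors: speedup {s:.2f}, efficiency {e:.0%}")
```

Tabulating efficiency against processor count in this way is a quick check on scalability: efficiency that falls steadily as processors are added suggests that communication or serial sections are starting to dominate.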

Future Trends in Parallel Computing Technology

The landscape of parallel computing technology is continually evolving, driven by advancements in hardware and software innovations. One prominent trend is the increasing adoption of heterogeneous computing environments that combine CPUs with specialized accelerators such as GPUs and TPUs (Tensor Processing Units). These architectures allow for more efficient processing of specific workloads, particularly in fields like machine learning and scientific simulations.

Another emerging trend is the rise of quantum computing as a new computational paradigm. Quantum computers use quantum bits (qubits), and for certain types of problems, such as factoring large integers or simulating quantum systems, known quantum algorithms promise large speedups over the best known classical methods. While still in its infancy, quantum computing holds promise for revolutionizing fields that require immense computational power.

Furthermore, advancements in cloud computing are making parallel computing resources more accessible than ever before. Cloud platforms offer scalable infrastructure that allows organizations to harness powerful parallel computing capabilities without significant upfront investment in hardware. As these technologies continue to mature, they will likely reshape how researchers and businesses approach complex computational challenges across various domains.

